Website crawlability is the cornerstone of effective SEO: if search engines cannot easily access and understand your pages, your content remains invisible. In today’s competitive landscape, leveraging AI-powered SEO audit tools can transform your site’s visibility by diagnosing and fixing crawlability challenges at scale. This guide explains why crawlability matters, common pitfalls, and how to harness AI for actionable improvements.

- Understand what crawlability means and why it impacts your SEO results

- Identify technical issues that block search engine bots

- See how AI SEO audit tools streamline the entire analysis process

- Get step-by-step guidance to optimize your site structure and indexing

- Discover how to maintain crawlability over time for sustainable growth

If you’re ready to automate technical SEO and see fast results, explore our crawlability analysis and SEO audit page for advanced solutions.

Understanding website crawlability

What is website crawlability?

Crawlability refers to how easily search engine bots, also known as crawlers or spiders, can access and navigate your website’s content. When a site is crawlable, these bots can efficiently discover, read, and index your pages, making them eligible to appear in search results. Inadequate crawlability can lead to pages being ignored, resulting in lost organic traffic and missed business opportunities.

Crawlability is a technical SEO factor ensuring that every important page can be found, rendered, and indexed by search engines.

For example, if your site’s navigation is hidden behind JavaScript or your internal links are broken, essential pages may remain undiscovered. Consistent crawlability analysis helps you detect such issues early, keeping your content visible and competitive.

How search engines crawl and index websites

Search engines like Google deploy automated bots to traverse the web, starting with a list of known URLs. These bots follow links from page to page, gathering data and sending it back to their index for processing. The process involves several steps: crawling (finding pages), rendering (processing page content, including JavaScript), and indexing (adding pages to the search engine’s database).
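The link-following step can be illustrated with a minimal sketch. It is not how any particular search engine works; it only shows the idea of extracting links from a page and keeping the ones on the same host. The names `LinkExtractor` and `discover_links` and the `example.com` URLs are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags, much as a crawler discovers new URLs."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def discover_links(page_url, html):
    """Return absolute, same-host URLs linked from the HTML, in order, without duplicates."""
    parser = LinkExtractor()
    parser.feed(html)
    host = urlparse(page_url).netloc
    found = []
    for href in parser.hrefs:
        # Resolve relative links and drop the #fragment, which does not change the page.
        absolute = urljoin(page_url, href).split("#")[0]
        if urlparse(absolute).netloc == host and absolute not in found:
            found.append(absolute)
    return found


# Hypothetical sample page used only for illustration.
sample_html = (
    '<a href="/pricing">Pricing</a> '
    '<a href="https://other.example/x">External</a> '
    '<a href="/pricing#top">Pricing again</a>'
)
links = discover_links("https://example.com/", sample_html)
```

A real crawler would repeat this for every discovered URL, which is why a page with no inbound links is never found this way.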

Factors such as site architecture, robots.txt directives, meta robots tags, and the presence of sitemaps influence how thoroughly your site is crawled. Proper crawlability ensures that your latest content, updates, and structural changes are quickly reflected in search results.

Common crawlability issues affecting SEO

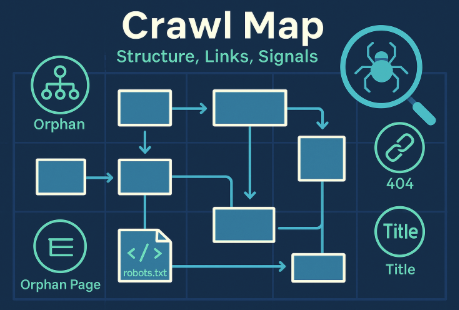

Several technical pitfalls can hinder a search engine’s ability to crawl your website effectively:

- Broken links and 404 errors prevent bots from accessing content

- Incorrect robots.txt or meta robots directives block critical pages

- Duplicate pages dilute your site’s authority and confuse search engines

- Poor internal linking or orphaned pages leave important content undiscovered

- Heavy use of JavaScript or AJAX without proper fallback limits crawler access

Addressing these crawlability issues is essential for search engine optimization. Ongoing auditing and analytics help identify and resolve problems before they impact rankings.
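As one example from the list above, a stray meta robots directive can be detected with a few lines of code. This is a minimal sketch that assumes the page HTML is already available as a string; `MetaRobotsParser` and `robots_directives` are hypothetical names.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            # Directives are comma-separated and case-insensitive.
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def robots_directives(html):
    """Return the set of meta robots directives found in the HTML."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page head: a "noindex" here would keep the page out of search results.
page = '<head><meta name="robots" content="NOINDEX, follow"></head>'
is_blocked = "noindex" in robots_directives(page)
```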

Summary: Website crawlability is vital for SEO visibility. Understanding how bots access and index your content and proactively fixing issues ensures your site performs at its best.

The role of AI in crawlability analysis

How AI-powered SEO audit tools work

AI-powered SEO audit tools automate and scale the crawlability analysis process. Instead of manually checking hundreds of pages, these tools deploy intelligent agents that crawl with advanced algorithms, simulating search engine bots. They analyze site structure, extract custom data, and flag technical SEO issues in real time.

Modern AI audit platforms, such as Hack The SEO, leverage NLP (Natural Language Processing), analytics integration, and custom extraction to surface insights that were previously only accessible to expert consultants. They handle JavaScript rendering during crawls, validate structured data, and can export source-code search reports for large websites.

AI SEO audit tools bridge the gap between technical diagnostics and actionable recommendations, reducing human error and accelerating fixes.

This automation is especially helpful for sites with frequent updates, multi-language content, or complex authentication, saving hours while delivering consistent, high-quality results.

Key features to look for in an AI SEO audit tool

Choosing the right AI SEO audit tool is critical to your technical SEO success. Here are the features that matter most:

- Comprehensive site crawling, including custom JavaScript and authentication support

- Metadata review, plus analytics integration with Google and other analytics tools

- Duplicate content detection and broken link auditing

- Spelling and grammar validation, and page title optimization

- Custom extraction, directive management, visualisations, and crawl limit controls

- Hreflang attribute validation for international SEO

- Automated reporting, download options, and log file history

Look for tools that offer transparent pricing, flexible source-code search, and support and training resources to maximize your team’s capabilities.

Advantages of using AI for crawlability analysis

AI brings substantial advantages to crawlability analysis:

- Speed: Automate large-scale audits, reducing analysis time from days to minutes

- Accuracy: Minimize missed errors and false positives by combining AI with Google analytics integration

- Scalability: Audit sites with thousands of URLs, dynamic content, or version changes effortlessly

- Actionable insights: Get prioritized recommendations for fixes, not just raw data

- Continuous improvement: Schedule regular audits and receive real-time alerts as issues arise

With AI, technical SEO becomes more accessible, enabling teams to focus on strategy instead of manual diagnostics.

Summary: AI-powered tools streamline crawlability analysis by automating complex tasks, delivering faster, more accurate results, and helping you prioritize high-impact improvements.

Step-by-step crawlability analysis with AI tools

Initial site crawl and data collection

Every successful crawlability analysis begins with a comprehensive site crawl. AI-powered SEO tools start by crawling your entire website, respecting robots.txt directives and authentication requirements. This first pass gathers essential data: page URLs, page titles, meta descriptions, response codes, and internal link structure.

The process uses advanced crawling algorithms and custom JavaScript support to access all public and protected areas of your site. The resulting dataset forms the foundation for detailed analysis, allowing you to identify both obvious and hidden technical SEO issues.
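The kind of record a first crawl pass collects can be sketched as follows. This assumes the HTML and response code have already been fetched; `PageRecord`, `HeadParser`, and `build_record` are hypothetical names, and real tools capture many more fields.

```python
from dataclasses import dataclass
from html.parser import HTMLParser


@dataclass
class PageRecord:
    url: str
    status: int
    title: str
    meta_description: str


class HeadParser(HTMLParser):
    """Extracts the <title> text and the meta description from a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def build_record(url, status, html):
    """Combine the response code with the on-page fields into one crawl record."""
    parser = HeadParser()
    parser.feed(html)
    return PageRecord(url, status, parser.title.strip(), parser.meta_description)


# Hypothetical response used only for illustration.
record = build_record(
    "https://example.com/",
    200,
    '<html><head><title> Home </title>'
    '<meta name="description" content="Welcome"></head></html>',
)
```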

Identifying and prioritizing crawl errors

Once the initial crawl is complete, the tool’s auditing engine scans for crawl errors. Common problems include broken links, 404s, redirects, and nofollow tags that may unintentionally block important pages. The platform uses Google analytics integration and custom extraction to prioritize errors based on impact.

- High-priority: Errors that prevent bots from reaching revenue-driving pages

- Medium-priority: Issues that reduce crawl efficiency (e.g., unnecessary redirects, duplicate directives)

- Low-priority: Minor spelling or grammar inconsistencies

Automated reporting and downloadable checklists help you delegate fixes and track progress efficiently.
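The three priority tiers above could be expressed as a simple rule set. The sketch below is an assumption about how such rules might look, not a description of any tool’s scoring: the issue type names, the `is_revenue_page` field, and the choice to rate blocking errors on non-revenue pages as medium are all hypothetical.

```python
# Hypothetical issue categories; real audit tools define their own.
BLOCKING_ISSUES = {"broken_link", "404", "blocked_by_robots", "noindex"}
EFFICIENCY_ISSUES = {"redirect_chain", "duplicate_directive"}


def prioritize(issue):
    """Map an issue dict with 'type' and optional 'is_revenue_page' to a priority."""
    issue_type = issue["type"]
    if issue_type in BLOCKING_ISSUES and issue.get("is_revenue_page", False):
        return "high"
    # Assumption: blocking errors on other pages are treated like efficiency issues.
    if issue_type in BLOCKING_ISSUES or issue_type in EFFICIENCY_ISSUES:
        return "medium"
    return "low"


issues = [
    {"url": "/blog/typo", "type": "spelling"},
    {"url": "/checkout", "type": "404", "is_revenue_page": True},
    {"url": "/old-page", "type": "redirect_chain"},
]
order = {"high": 0, "medium": 1, "low": 2}
ranked = sorted(issues, key=lambda issue: order[prioritize(issue)])
```

Sorting by priority puts the checkout error first, which is the order in which fixes would be delegated.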

Detecting duplicate content and broken links

Duplicate content and broken links are two of the most damaging crawlability issues. AI SEO audit tools use custom extraction and exact-duplicate page detection to surface problematic URLs. They flag broken links in page titles, navigation, and within content, enabling you to quickly repair or redirect them.

Eliminating duplicate pages and fixing broken links improves both user experience and search engine optimization.

Ongoing analysis ensures new content updates do not introduce further errors, helping you maintain a clean, crawlable site.
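Exact-duplicate detection is commonly done by hashing normalized page text and grouping URLs that share a hash. The sketch below shows that general technique under the assumption that page text has already been extracted; it does not describe how any specific tool works, and the function names are illustrative.

```python
import hashlib
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after lowercasing and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages):
    """Given {url: text}, return groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl output used only for illustration.
pages = {
    "/shoes": "Red running  shoes",
    "/shoes?ref=home": "red running shoes",
    "/about": "About our company",
}
duplicates = find_exact_duplicates(pages)
```

This only catches identical content after normalization. Near-duplicates need a similarity measure rather than a hash comparison.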

Evaluating robots.txt, meta robots, and sitemaps

AI tools automatically audit your robots.txt file, meta robots tags, and XML sitemaps. They check for incorrect directives, blocked resources, or missing URLs that could hinder crawling. Automated structured data validation helps confirm that indexing signals are correctly implemented.
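One such check, finding sitemap URLs that robots.txt blocks, can be sketched with Python’s standard `urllib.robotparser` module. The robots.txt content, the URL list, and the `blocked_urls` helper are hypothetical, and the standard-library parser implements basic prefix matching that may differ from a given search engine’s rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /products/
"""


def blocked_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that the given robots.txt disallows for the user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# URLs that would typically come from the XML sitemap.
sitemap_urls = [
    "https://example.com/products/shoes",
    "https://example.com/blog/post",
]
conflicts = blocked_urls(ROBOTS_TXT, sitemap_urls, user_agent="Googlebot")
```

A URL listed in the sitemap but disallowed in robots.txt is a conflicting signal worth reviewing.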

For international sites, hreflang attributes are checked for consistency to support global SEO strategies. The platform may also offer guides and tutorials on common issues, support and pricing options, and log file tracking for compliance and transparency.
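A common hreflang consistency check is for return links: if page A names page B as an alternate, B should name A back. A minimal sketch follows, assuming the hreflang annotations have already been extracted into a dictionary; the data layout and the function name are hypothetical.

```python
def missing_return_links(hreflang_map):
    """Given {page_url: {lang: alternate_url}}, return (page, alternate) pairs
    where the alternate page does not link back to the page."""
    problems = []
    for page, alternates in hreflang_map.items():
        for alternate in alternates.values():
            if alternate == page:
                continue  # self-references are expected
            back_links = hreflang_map.get(alternate, {})
            if page not in back_links.values():
                problems.append((page, alternate))
    return sorted(problems)


# Hypothetical annotations: the French page forgets to reference the English one.
hreflang_map = {
    "https://example.com/en/": {
        "en": "https://example.com/en/",
        "fr": "https://example.com/fr/",
    },
    "https://example.com/fr/": {
        "fr": "https://example.com/fr/",
    },
}
problems = missing_return_links(hreflang_map)
```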

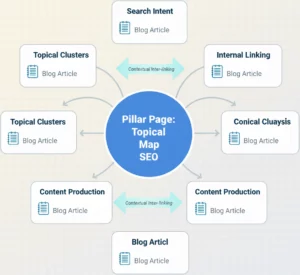

Analyzing site architecture and internal linking

A logical, well-structured site architecture boosts crawl efficiency. AI-powered audits map your site’s internal linking, flagging orphaned pages and identifying opportunities for improved navigation. The platform can analyze custom JavaScript menus, track version changes, and render JavaScript during crawls to ensure all content is accessible.

Visualisations, crawl limit controls, and analytics provide actionable insights to optimize the user journey and the paths search engines follow.
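Orphaned pages can be found by comparing the URLs you know exist (for example, from the sitemap) with the URLs reachable by following internal links from the home page. The sketch below uses a breadth-first search over a link graph; the graph, the URLs, and the function name are assumptions for illustration.

```python
from collections import deque


def find_orphans(link_graph, start, known_urls):
    """Return known URLs that cannot be reached from `start` via internal links.

    link_graph maps each page to the list of pages it links to.
    """
    reachable = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(known_urls) - reachable)


# Hypothetical site: "/landing" links out but nothing links to it.
link_graph = {
    "/": ["/products", "/blog"],
    "/products": ["/products/shoes"],
    "/landing": ["/products"],
}
known_urls = ["/", "/products", "/products/shoes", "/blog", "/landing"]
orphans = find_orphans(link_graph, "/", known_urls)
```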

Summary: Step-by-step AI-driven crawlability analysis reveals critical technical SEO issues, from crawl errors to duplicate pages, ensuring your site is fully accessible to search engines.

Optimizing website crawlability post-analysis

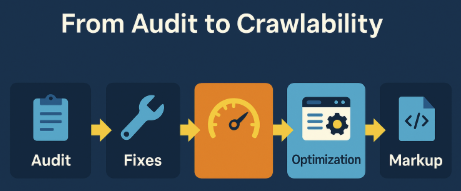

Fixing technical SEO issues uncovered by the audit

Post-audit, your priority is to address technical SEO issues flagged during the crawlability analysis. These may include resolving broken links, updating or removing nofollow tags, fixing incorrect directives, and consolidating exact duplicate pages. AI-powered platforms often provide actionable, prioritized lists, making it easy to delegate tasks across your team.

Quick resolution of these issues improves search engine access and prevents future indexing problems.

Improving site speed and mobile-friendliness

Site speed and mobile-friendliness are essential for crawlability and user experience. AI audit tools measure load times, flag slow resources (like heavy JavaScript), and suggest optimizations. They also test mobile layouts for responsive design, ensuring bots can properly render and index all content.

Improved performance can lead to higher crawl frequency and better search rankings.

Optimizing on-page elements for better crawling

On-page elements such as page titles, headings, meta tags, and structured data directly influence how search engines interpret your content. AI SEO tools automate spelling, grammar, and metadata checks, helping ensure every page is optimized for discovery.

Properly optimized on-page elements boost both crawlability and search engine rankings.

Use analytics to track improvements and iterate as needed.

Enhancing structured data and schema markup

Structured data helps search engines understand your content and display rich results. AI SEO audit tools validate schema markup, flagging errors and offering suggestions for better implementation. They support complex structured data validation, hreflang attributes for multilingual sites, and custom extraction for unique content types.

Correct schema markup increases visibility in SERPs and supports the search engine features that depend on it.
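A basic structured data check can be sketched as follows: extract each JSON-LD block, confirm it parses, and confirm the top-level object has `@context` and `@type`. This is a deliberately small subset of what a full validator does, and `JsonLdExtractor` and `check_json_ld` are hypothetical names.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._buffer = None

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            self.blocks.append("".join(self._buffer))
            self._buffer = None


def check_json_ld(html):
    """Return a list of problems found in the page's JSON-LD blocks."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    problems = []
    for index, raw in enumerate(extractor.blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            problems.append(f"block {index}: invalid JSON")
            continue
        items = data if isinstance(data, list) else [data]
        for item in items:
            if not isinstance(item, dict):
                problems.append(f"block {index}: expected an object")
                continue
            for key in ("@context", "@type"):
                if key not in item:
                    problems.append(f"block {index}: missing {key}")
    return problems


# Hypothetical markup that omits @context.
bad_page = '<script type="application/ld+json">{"@type": "Article"}</script>'
report = check_json_ld(bad_page)
```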

Summary: After analysis, focusing on technical fixes, speed, on-page elements, and structured data ensures your site remains fully crawlable and search-friendly.

Monitoring and maintaining crawlability over time

Setting up regular automated crawl audits

Crawlability is not a one-time task; ongoing monitoring is essential. AI-powered tools allow you to schedule automated crawl audits at set intervals, using log file functionality to maintain a history of changes and improvements.

Regular analysis helps you catch new issues before they affect rankings and ensures your site evolves in line with best practices.

Tracking changes and measuring improvements

To measure progress, track key metrics such as the number of crawl errors, index coverage, and site speed over time. Analytics integration with Google and other platforms lets you correlate crawlability improvements with organic traffic growth.

Automated reports and downloadable analytics make it easy to share results with stakeholders and demonstrate ROI.
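Tracking crawl errors over time amounts to comparing two audit snapshots. The sketch below assumes each snapshot is a dictionary of URL to HTTP status code and treats any status of 400 or above as an error; the data and the `compare_audits` name are hypothetical.

```python
def compare_audits(previous, current):
    """Compare two {url: status_code} snapshots and report error changes.

    Assumption: a URL that no longer appears in `current` counts as fixed.
    """
    def error_urls(snapshot):
        return {url for url, status in snapshot.items() if status >= 400}

    before, after = error_urls(previous), error_urls(current)
    return {
        "fixed": sorted(before - after),
        "new": sorted(after - before),
        "remaining": sorted(before & after),
    }


# Hypothetical snapshots from two scheduled audits.
last_month = {"/pricing": 404, "/blog": 200, "/api/docs": 500}
this_month = {"/pricing": 200, "/blog": 404, "/api/docs": 500}
changes = compare_audits(last_month, this_month)
```

The `new` list is usually the one to alert on, since it shows regressions introduced since the last audit.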

Integrating crawlability checks with ongoing SEO strategies

Crawlability should be a core component of your ongoing SEO strategy. Integrate regular crawl audits, technical fixes, and on-page optimization into your workflow. Guides, tutorials, and training offered by your AI SEO tool can help your team stay current with evolving search engine requirements and directives.

This proactive approach ensures your site remains competitive in fast-changing digital markets.

Summary: Consistent monitoring and integration of crawlability analysis into your SEO strategy leads to lasting improvements, increased traffic, and sustained search engine visibility.

FAQ

What are the most common crawlability issues websites face?

The most frequent crawlability issues include broken links, incorrect robots.txt directives, duplicate content, missing or misconfigured sitemaps, and heavy reliance on JavaScript for navigation. These problems can block search engines from indexing your pages, hurting your SEO performance.

How often should crawlability analysis be performed?

Regular crawlability analysis is recommended at least once a month, or whenever you launch new content, redesign your site, or implement major changes. Automated tools with log file features make it easy to keep track of your site’s health over time.

Can AI tools replace manual SEO audits?

AI SEO audit tools automate many technical checks and metadata review tasks, speeding up the process and reducing human error. However, human expertise is still valuable for interpreting results, customizing strategies, and handling unique website requirements. The best approach combines both.

What metrics indicate improved crawlability?

Improved crawlability is reflected in increased index coverage, reduced crawl errors, faster page load times, and higher organic search traffic. Monitoring these metrics through your analytics integration helps you visualize progress and spot areas for further optimization.

Is crawlability important for all types of websites?

Yes, crawlability is essential for all websites regardless of size or industry. Even small sites can suffer from technical issues that block search engines. Regular crawlability analysis helps ensure your content is discoverable and able to rank in search results.

Accelerate your SEO success with AI-powered crawlability analysis

Improving your website’s crawlability is the foundation of technical SEO. With AI-powered audit tools like those from Hack The SEO, you can automate complex analysis, prioritize critical fixes, and maintain a healthy, visible site with minimal manual effort. The result: higher search engine rankings, more organic traffic, and a sustainable growth advantage.

- Schedule regular automated crawl audits for ongoing site health

- Leverage AI to detect and resolve technical SEO issues instantly

- Optimize site architecture, on-page elements, and structured data

- Monitor key metrics and adapt strategies for continuous improvement

- Empower your team with actionable insights and expert support

Ready to transform your SEO workflow? Embrace AI-driven crawlability analysis and unlock your site’s full potential with Hack The SEO’s advanced solutions.

Eric Ibanez

Co-founder of Hack The SEO

Eric Ibanez founded Hack The SEO and supports growth-oriented SEO strategies. He is also co-author of the book SEO pour booster sa croissance, published by Dunod.
