Automated AI agents are set to flood the web with unprecedented crawling activity, warns Google’s Gary Illyes. As AI adoption surges, SEO professionals face new challenges in managing crawl budgets and maintaining site performance.


Google Issues Clear Warning on Web Crawling Surge

In a recent episode of Google’s official _Search Off the Record_ podcast, **Gary Illyes** from the Search Relations team issued a stark warning: the web is facing an **unprecedented surge in crawling activity**, fueled by automated AI agents.

🎙️ “Everyone and my grandmother is launching a crawler… The web is getting congested… but it’s designed to handle it.”

Gary Illyes, Google

The message is clear: technical SEO is entering a new era, in which crawling and server resource management are no longer back-end concerns; they are business-critical.

Real-World Impact: AI Bots Are Flooding Websites

AI agents are now widely used for:

- Content creation (via LLMs and AI writing tools)
- Competitive intelligence and reverse engineering
- Market research and pricing data collection
- Dataset building for machine learning models

➡️ Each tool launches its own crawler, rapidly multiplying automated traffic across the web.

🧪 Case Example from SEO Agency “X Metrics”:

A mid-size tech media site saw its bot traffic grow sixfold over two months, including a 400% increase in hits from undocumented AI crawlers. The result: server slowdowns, delayed indexing, and recurring 502 errors during peak loads.
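To see whether a site is heading the same way, a reasonable first step is counting requests per crawler in the access logs. The sketch below assumes the common Apache/Nginx "combined" log format and uses a short, illustrative list of user-agent tokens (Googlebot, GPTBot, ClaudeBot, CCBot, PerplexityBot, Bytespider). The token list and the `crawler_breakdown` helper are assumptions to adapt, and a User-Agent string only reports what a client *claims* to be.

```python
import re
from collections import Counter

# Illustrative, non-exhaustive user-agent tokens (an assumption: extend for your own logs).
KNOWN_CRAWLERS = ("Googlebot", "GPTBot", "ClaudeBot", "CCBot", "PerplexityBot", "Bytespider")
GENERIC_BOT_HINTS = ("bot", "crawler", "spider")

# Combined Log Format: ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(r'^\S+ \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "([^"]*)"')


def classify(user_agent: str) -> str:
    """Map a User-Agent header to a crawler label (self-reported, not verified)."""
    ua = user_agent.lower()
    for token in KNOWN_CRAWLERS:
        if token.lower() in ua:
            return token
    if any(hint in ua for hint in GENERIC_BOT_HINTS):
        return "other-bot"
    return "human-or-unknown"


def crawler_breakdown(lines) -> Counter:
    """Count log lines per crawler label, skipping lines that do not parse."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match:
            counts[classify(match.group(1))] += 1
    return counts


# Made-up sample lines (documentation IP ranges).
sample_log = [
    '192.0.2.10 - - [10/Mar/2025:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.20 - - [10/Mar/2025:10:00:01 +0000] "GET /a HTTP/1.1" 200 1024 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.1; +https://openai.com/gptbot)"',
    '203.0.113.5 - - [10/Mar/2025:10:00:02 +0000] "GET /b HTTP/1.1" 200 2048 "https://example.com/" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:128.0) Gecko/20100101 Firefox/128.0"',
    '198.51.100.7 - - [10/Mar/2025:10:00:03 +0000] "GET /c HTTP/1.1" 502 0 "-" '
    '"SomeNewAgent/0.1 (data crawler)"',
    "not a log line",
]

breakdown = crawler_breakdown(sample_log)
print(breakdown)
```

Tracking these counts week over week (rather than once) is what would reveal a sixfold jump like the one described above.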

AI Agents Set to Drive Unprecedented Web Crawling

Gary Illyes of Google’s Search Relations team warns that the rise of AI agents and automated bots will significantly increase web traffic. In a recent “Search Off the Record” podcast, Illyes remarked, “everyone and my grandmother is launching a crawler,” highlighting the widespread deployment of AI-driven tools. These agents are being used for content creation, competitor analysis, market research, and data collection, all of which depend on large-scale website crawling.

The rapid expansion of AI-driven tools is expected to further congest the web. As each new tool requires its own data pipeline, server requests are multiplying. Illyes emphasized, “The web is getting congested… It’s not something that the web cannot handle… the web is designed to be able to handle all that traffic even if it’s automatic.” However, for site owners and SEO managers, the concern is less about web infrastructure and more about the strain on individual sites and the complexity of traffic management.


Google’s Unified Crawler System: Implications for SEO

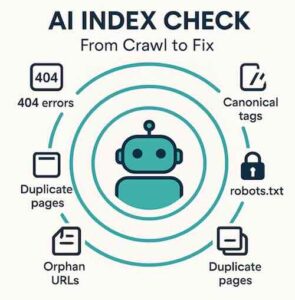

Google’s infrastructure uses a unified crawler for all its products, including Search, AdSense, and Gmail. Each product identifies itself with a distinct user agent, but all follow the same robots.txt rules and server-health safeguards. In practice, a single technical system handles both search indexing and Google’s other automated fetching, and it scales back its crawling when a site shows signs of strain.
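Google has not published the exact logic behind this scaling back, but the general idea can be sketched as an additive-increase / multiplicative-decrease controller: speed up slowly while responses are healthy, halve the rate when the server signals trouble. `CrawlRate` and all of its parameters are hypothetical. Google’s crawl-rate documentation does mention 500, 503 and 429 responses as signals that slow Googlebot down; including 502 and 504 is an assumption of this sketch.

```python
from dataclasses import dataclass

# Status codes treated here as "the server is struggling". Google's crawl-rate docs
# mention 500, 503 and 429; adding 502 and 504 is an assumption of this sketch.
BACKOFF_STATUSES = {429, 500, 502, 503, 504}


@dataclass
class CrawlRate:
    """Hypothetical per-host rate controller (AIMD), not Google's actual algorithm."""

    requests_per_second: float = 2.0
    min_rps: float = 0.1
    max_rps: float = 10.0
    increase_step: float = 0.5    # additive increase after a healthy response
    decrease_factor: float = 0.5  # multiplicative decrease after a struggling response

    def record(self, status: int) -> float:
        """Update the rate from one response status and return the new rate."""
        if status in BACKOFF_STATUSES:
            self.requests_per_second = max(
                self.min_rps, self.requests_per_second * self.decrease_factor
            )
        else:
            self.requests_per_second = min(
                self.max_rps, self.requests_per_second + self.increase_step
            )
        return self.requests_per_second


rate = CrawlRate()
history = [rate.record(status) for status in (200, 200, 503, 503, 200)]
print(history)  # [2.5, 3.0, 1.5, 0.75, 1.25]
```

The asymmetry is deliberate: backing off quickly protects a struggling server, while recovering slowly avoids immediately re-triggering the overload.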

Illyes explained that teams using the shared crawler must identify themselves: “You can fetch with it from the internet but you have to specify your own user agent string.” For SEO professionals, this underscores the importance of accurate robots.txt configuration and server monitoring, since a misconfiguration can affect not only organic search but other Google services as well.
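Because each crawler announces its own user-agent token, one robots.txt file can give different crawlers different rules. The sketch below checks a sample policy with Python’s standard-library `urllib.robotparser`; the rules, paths, the `SomeOtherBot` agent, and the `example.com` URLs are illustrative assumptions, not a recommended configuration. This parser applies the first matching rule in order rather than Google’s most-specific-match precedence, so treat it as a quick sanity check, not a reproduction of how Googlebot reads the file.

```python
from urllib.robotparser import RobotFileParser

# Illustrative policy (an assumption, not a recommendation): Googlebot may crawl
# everything except internal search, GPTBot is blocked entirely, and every other
# crawler falls back to the "*" group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /internal-search/

User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /internal-search/
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask the parser which (crawler, path) pairs are allowed.
checks = {
    (agent, path): parser.can_fetch(agent, "https://www.example.com" + path)
    for agent in ("Googlebot", "GPTBot", "SomeOtherBot")
    for path in ("/blog/post", "/internal-search/?q=x", "/cart/")
}

for (agent, path), allowed in checks.items():
    print(f"{agent:<13} {path:<22} {'allowed' if allowed else 'blocked'}")
```

Note that a crawler matching a named group (Googlebot here) does not also inherit the `*` rules, which is why `/cart/` stays open to Googlebot in this example.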

Crawling vs. Indexing: Where the Real Resource Drain Lies

Contrary to common SEO belief, Illyes argues that crawling itself is not the main resource consumer. He stated, “It’s not crawling that is eating up the resources, it’s indexing and potentially serving or what you are doing with the data.” The implication: fetching pages puts minimal load on sites compared to the computational demands of processing and storing data.

For those focused on crawl budget, this perspective suggests a shift in optimization priorities. Rather than solely limiting crawl rates, SEO teams should also consider how their sites’ structure and content affect indexing and data storage.
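One structural issue that inflates both crawling and indexing work is the same page being reachable under many URLs (tracking parameters, session IDs, inconsistent host casing). As a hypothetical illustration, the sketch below groups crawled URLs by a normalized form so that such duplicates become visible; the ignored-parameter lists and sample URLs are assumptions that would need to match what genuinely does not change content on a given site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed not to change page content on this site.
IGNORED_PARAMS = {"sessionid", "ref"}
IGNORED_PREFIXES = ("utm_",)


def normalize(url: str) -> str:
    """Lowercase the host, drop ignored parameters, sort the rest, drop fragments."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS and not key.startswith(IGNORED_PREFIXES)
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/", urlencode(kept), ""))


def duplicate_groups(urls):
    """Return normalized URL -> set of crawled variants, for groups with 2+ variants."""
    groups = defaultdict(set)
    for url in urls:
        groups[normalize(url)].add(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


crawled = [
    "https://www.example.com/shoes?utm_source=news&color=red",
    "https://www.example.com/shoes?color=red&sessionid=abc",
    "https://WWW.example.com/shoes?color=red",
    "https://www.example.com/shoes?color=blue",
]

duplicates = duplicate_groups(crawled)
for canonical, variants in duplicates.items():
    print(canonical, "<-", len(variants), "crawled variants")
```

Each extra variant in a group is a page that gets fetched and processed again without adding anything new to the index.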

Web Growth and Persistent Congestion: A Historical Perspective

The scale of the web has exploded since the 1990s. In 1994, the World Wide Web Worm indexed just 110,000 pages, and WebCrawler reached 2 million. Today, a single website can exceed millions of indexed pages, and the protocols used to fetch them have evolved from HTTP/1.1 to HTTP/2 and the newer HTTP/3.

Despite ongoing efforts to reduce Google’s crawling footprint, new AI products are constantly increasing demand. Illyes noted, “You saved seven bytes from each request that you make and then this new product will add back eight.” Each efficiency gain is quickly offset by rising traffic from novel AI applications.

What This Means for SEO Strategy and Site Management

The surge in AI-driven crawling introduces new complexity for SEO professionals. Increased automated traffic can affect server resources, skew analytics, and complicate traffic management. As AI adoption accelerates, site owners will need robust server infrastructure and refined robots.txt management to differentiate between valuable and redundant crawler activity.
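Differentiating crawlers also means not trusting the User-Agent header alone, since any client can claim to be Googlebot. Google documents a reverse-then-forward DNS check for verifying its crawlers; the sketch below implements that check with Python’s `socket` module. The accepted domain suffixes are an assumption to confirm against Google’s current verification documentation, the `is_verified_googlebot` helper is hypothetical, and the resolvers are injectable so the example runs on made-up data without network access. `gethostbyname_ex` returns only IPv4 addresses, so IPv6 clients would need `getaddrinfo` instead.

```python
import socket
from typing import Callable, Sequence

# Assumed accepted suffixes; confirm against Google's current crawler-verification docs.
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")


def _reverse(ip: str) -> str:
    return socket.gethostbyaddr(ip)[0]


def _forward(host: str) -> Sequence[str]:
    return socket.gethostbyname_ex(host)[2]  # IPv4 addresses only


def is_verified_googlebot(
    ip: str,
    reverse_lookup: Callable[[str], str] = _reverse,
    forward_lookup: Callable[[str], Sequence[str]] = _forward,
) -> bool:
    """Reverse DNS must land on a Google domain, and forward DNS must map back to ip."""
    try:
        host = reverse_lookup(ip).rstrip(".").lower()
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return False
    if not host.endswith(GOOGLE_CRAWLER_SUFFIXES):
        return False
    try:
        return ip in forward_lookup(host)
    except OSError:
        return False


# Made-up DNS data (documentation IP ranges) so the example runs offline.
FAKE_PTR = {
    "192.0.2.10": "crawl-192-0-2-10.googlebot.com",
    "192.0.2.20": "crawl.googlebot.com.attacker.example",
    "192.0.2.30": "crawl-192-0-2-30.googlebot.com",
}
FAKE_A = {
    "crawl-192-0-2-10.googlebot.com": ["192.0.2.10"],
    "crawl-192-0-2-30.googlebot.com": ["198.51.100.1"],
}


def fake_reverse(ip: str) -> str:
    if ip not in FAKE_PTR:
        raise socket.herror(f"no PTR record for {ip}")
    return FAKE_PTR[ip]


def fake_forward(host: str) -> Sequence[str]:
    if host not in FAKE_A:
        raise socket.gaierror(f"no A record for {host}")
    return FAKE_A[host]


genuine = is_verified_googlebot("192.0.2.10", fake_reverse, fake_forward)
spoofed = is_verified_googlebot("192.0.2.20", fake_reverse, fake_forward)
mismatched = is_verified_googlebot("192.0.2.30", fake_reverse, fake_forward)
unknown = is_verified_googlebot("203.0.113.5", fake_reverse, fake_forward)
print(genuine, spoofed, mismatched, unknown)  # True False False False
```

The forward lookup is the step that defeats spoofing: anyone can publish a PTR record naming a Google host, but only Google controls the forward records for its own domains.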

Optimization priorities may need to shift, with greater emphasis on monitoring server health, managing indexing, and assessing the real impact of automated traffic on site performance and analytics.
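Assessing that impact can start with a per-hour view of how much traffic is automated and how often the server fails. The sketch assumes requests have already been parsed and labeled as bot or not upstream (for example by user-agent and DNS checks); the `Request` record, the `hourly_health` helper, and the 2% error-rate alert threshold are hypothetical choices.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Request:
    hour: str      # e.g. "2025-03-10T11", already bucketed upstream
    is_bot: bool   # assumed to come from an earlier user-agent / DNS classification step
    status: int


def hourly_health(requests, error_threshold: float = 0.02):
    """Per hour: share of bot requests, share of 5xx responses, and an alert flag."""
    buckets = defaultdict(lambda: {"total": 0, "bots": 0, "errors": 0})
    for req in requests:
        bucket = buckets[req.hour]
        bucket["total"] += 1
        bucket["bots"] += int(req.is_bot)
        bucket["errors"] += int(500 <= req.status <= 599)
    report = {}
    for hour, b in sorted(buckets.items()):
        error_rate = b["errors"] / b["total"]
        report[hour] = {
            "bot_share": b["bots"] / b["total"],
            "error_rate": error_rate,
            "alert": error_rate > error_threshold,
        }
    return report


# Made-up sample: a bot-heavy hour that also produces a 502.
sample = [
    Request("2025-03-10T10", False, 200),
    Request("2025-03-10T10", False, 200),
    Request("2025-03-10T10", True, 200),
    Request("2025-03-10T10", False, 304),
    Request("2025-03-10T11", True, 200),
    Request("2025-03-10T11", True, 502),
    Request("2025-03-10T11", True, 200),
    Request("2025-03-10T11", False, 200),
]

report = hourly_health(sample)
for hour, stats in report.items():
    print(hour, stats)
```

Putting bot share and error rate side by side makes it easier to tell whether error spikes coincide with automated traffic or with ordinary user peaks.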

In summary, the web’s congestion from AI agents is poised to reshape technical SEO and site management. Ongoing vigilance and adaptation will be essential as the landscape continues to evolve.


Eric Ibanez

Co-founder of Hack The SEO

Eric Ibanez founded Hack The SEO and supports growth-oriented SEO strategies. He is also co-author of the book _SEO pour booster sa croissance_, published by Dunod.
